DALL-E 2, OpenAI’s powerful text-to-image AI system, can create images in the style of cartoonists, 19th century daguerreotypists, stop-motion animators and more. But it has an important, artificial limitation: a filter that prevents it from creating images depicting public figures and content deemed too toxic.

Now an open source alternative to DALL-E 2 is on the cusp of being released, and it will have no such filter.

London- and Los Altos-based startup Stability AI this week announced the release of a DALL-E 2-like system, Stable Diffusion, to just over a thousand researchers ahead of a public launch in the coming weeks. A collaboration between Stability AI, media creation company RunwayML, Heidelberg University researchers and the research groups EleutherAI and LAION, Stable Diffusion is designed to run on most high-end consumer hardware, generating 512×512-pixel images in just a few seconds given any text prompt.

Stable Diffusion sample outputs. Image Credits: Stability AI

“Stable Diffusion will allow both researchers and soon the public to run this under a range of conditions, democratizing image generation,” Stability AI CEO and founder Emad Mostaque wrote in a blog post. “We look forward to the open ecosystem that will emerge around this and further models to truly explore the boundaries of latent space.”

But Stable Diffusion’s lack of safeguards compared to systems like DALL-E 2 poses tricky ethical questions for the AI community. Even if the results aren’t perfectly convincing yet, making fake images of public figures opens a large can of worms. And making the raw components of the system freely available leaves the door open to bad actors who could train them on subjectively inappropriate content, like pornography and graphic violence.

Creating Stable Diffusion

Stable Diffusion is the brainchild of Mostaque. Having graduated from Oxford with a Masters in mathematics and computer science, Mostaque served as an analyst at various hedge funds before shifting gears to more public-facing works. In 2019, he co-founded Symmitree, a project that aimed to reduce the cost of smartphones and internet access for people living in impoverished communities. And in 2020, Mostaque was the chief architect of Collective & Augmented Intelligence Against COVID-19, an alliance to help policymakers make decisions in the face of the pandemic by leveraging software.

He co-founded Stability AI in 2020, motivated both by a personal fascination with AI and what he characterized as a lack of “organization” within the open source AI community.

An image of former president Barack Obama created by Stable Diffusion. Image Credits: Stability AI

“Nobody has any voting rights except our 75 employees: no billionaires, big funds, governments or anyone else with control of the company or the communities we support. We’re completely independent,” Mostaque told TechCrunch in an email. “We plan to use our compute to accelerate open source, foundational AI.”

Mostaque says that Stability AI funded the creation of LAION 5B, an open source, 250-terabyte dataset containing 5.6 billion images scraped from the internet. (“LAION” stands for Large-scale Artificial Intelligence Open Network, a nonprofit organization with the goal of making AI, datasets and code available to the public.) The company also worked with the LAION group to create a subset of LAION 5B called LAION-Aesthetics, which contains AI-filtered images ranked as particularly “beautiful” by testers of Stable Diffusion.

The initial version of Stable Diffusion was based on LAION-400M, the predecessor to LAION 5B, which was known to contain depictions of sex, slurs and harmful stereotypes. LAION-Aesthetics attempts to correct for this, but it’s too early to tell to what extent it’s successful.

A collage of images created by Stable Diffusion. Image Credits: Stability AI

In any case, Stable Diffusion builds on research incubated at OpenAI as well as Runway and Google Brain, one of Google’s AI R&D divisions. The system was trained on text-image pairs from LAION-Aesthetics to learn the associations between written concepts and images, like how the word “bird” can refer not only to bluebirds but parakeets and bald eagles, as well as more abstract notions.

At runtime, Stable Diffusion, like DALL-E 2, breaks the image generation process down into a process of “diffusion.” It starts with pure noise and refines an image over time, making it incrementally closer to a given text description until there’s no noise left at all.
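To make the idea of starting from noise and refining step by step concrete, here is a deliberately simplified sketch. It is not Stable Diffusion’s actual sampler: the function name `toy_denoise` and the fixed `target` list are hypothetical stand-ins, and a real system has no known target. Instead, a trained neural network, conditioned on the text prompt, predicts what noise to remove at each step.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Toy illustration of iterative refinement (an assumption-laden sketch).

    `target` stands in for "the image the prompt describes". A real diffusion
    model never sees such a target; it uses a learned, text-conditioned
    network to estimate the noise to strip away at each step.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    image = [rng.gauss(0.0, 1.0) for _ in target]
    for step in range(steps):
        # Close an equal share of the remaining gap on every step, so the
        # final step (remaining == 1) removes whatever noise is left.
        remaining = steps - step
        image = [x + (t - x) / remaining for x, t in zip(image, target)]
    return image


if __name__ == "__main__":
    print(toy_denoise([0.0, 0.5, 1.0]))
```

Each pass moves every value a little closer to the target, which mirrors the article’s description of an image becoming incrementally less noisy until none remains.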

Boris Johnson wielding various weapons, generated by Stable Diffusion. Image Credits: Stability AI

Stability AI used a cluster of 4,000 Nvidia A100 GPUs running in AWS to train Stable Diffusion over the course of a month. CompVis, the machine vision and learning research group at Ludwig Maximilian University of Munich, oversaw the training, while Stability AI donated the compute power.

Stable Diffusion can run on graphics cards with around 5GB of VRAM. That’s roughly the capacity of mid-range cards like Nvidia’s GTX 1660, priced around $230. Work is underway on bringing compatibility to AMD’s MI200 data center cards and even MacBooks with Apple’s M1 chip (although in the case of the latter, without GPU acceleration, image generation will take as long as a few minutes).

“We have optimized the model, compressing the knowledge of over 100 terabytes of images,” Mostaque said. “Variants of this model will be on smaller datasets, particularly as reinforcement learning with human feedback and other techniques are used to take these general digital brains and make them even smaller and focused.”

Samples from Stable Diffusion. Image Credits: Stability AI

For the past few weeks, Stability AI has allowed a limited number of users to query the Stable Diffusion model through its Discord server, slowly increasing the maximum number of queries to stress-test the system. Stability AI says that more than 15,000 testers have used Stable Diffusion to create 2 million images a day.

Far-reaching implications

Stability AI plans to take a dual approach in making Stable Diffusion more widely available. It’ll host the model in the cloud, allowing people to continue using it to generate images without having to run the system themselves. In addition, the startup will release what it calls “benchmark” models under a permissive license that can be used for any purpose, commercial or otherwise, as well as compute to train the models.

That will make Stability AI the first to release an image generation model nearly as high-fidelity as DALL-E 2. While other AI-powered image generators have been available for some time, including Midjourney, NightCafe and Pixelz.ai, none have open sourced their frameworks. Others, like Google and Meta, have chosen to keep their technologies under tight wraps, allowing only select users to pilot them for narrow use cases.

Stability AI will make money by training “private” models for customers and acting as a general infrastructure layer, Mostaque said, presumably with a sensitive treatment of intellectual property. The company claims to have other commercializable projects in the works, including AI models for generating audio, music and even video.

Sand sculptures of Harry Potter and Hogwarts, generated by Stable Diffusion. Image Credits: Stability AI

“We will provide more details of our sustainable business model soon with our official launch, but it is basically the commercial open source software playbook: services and scale infrastructure,” Mostaque said. “We think AI will go the way of servers and databases, with open beating proprietary systems, particularly given the passion of our communities.”

With the hosted version of Stable Diffusion (the one available through Stability AI’s Discord server), Stability AI doesn’t permit every kind of image generation. The startup’s terms of service ban some lewd or sexual material (although not scantily-clad figures), hateful or violent imagery (such as antisemitic iconography, racist caricatures, misogynistic and misandrist propaganda), prompts containing copyrighted or trademarked material, and personal information like phone numbers and Social Security numbers. But while Stability AI has implemented a keyword filter in the server similar to OpenAI’s, which prevents the model from even attempting to generate an image that might violate the usage policy, it appears to be more permissive than most.
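A keyword filter of this kind checks the prompt before the model ever runs. The sketch below shows the general technique only; the blocklist contents and the names `BLOCKED_TERMS` and `is_prompt_allowed` are hypothetical, and nothing here reflects the actual lists or logic used by Stability AI or OpenAI.

```python
import re

# Hypothetical blocklist for illustration; not any company's real list.
BLOCKED_TERMS = {"gore", "social security number"}


def is_prompt_allowed(prompt, blocked_terms=BLOCKED_TERMS):
    """Return False if the prompt contains a blocked word or phrase.

    Matching is case-insensitive and on whole words, so "gorgeous" does not
    trip the filter for "gore".
    """
    # Lowercase and collapse punctuation and whitespace into single spaces.
    normalized = " ".join(re.findall(r"[a-z0-9]+", prompt.lower()))
    padded = f" {normalized} "
    return not any(f" {term} " in padded for term in blocked_terms)


if __name__ == "__main__":
    for prompt in ["a gorgeous sunset over the sea", "battlefield GORE, photorealistic"]:
        print(prompt, "->", "allowed" if is_prompt_allowed(prompt) else "blocked")
```

Filters this simple are easy to evade with misspellings or paraphrase and can also block innocent prompts, which is one reason two services with similar filters can differ widely in how permissive they are in practice.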

(A previous version of this article implied that Stability AI wasn’t using a keyword filter. That’s not the case; TechCrunch regrets the error.)

A Stable Diffusion generation, given the prompt: “very sexy woman with black hair, pale skin, in bikini, wet hair, sitting on the beach.” Image Credits: Stability AI

Stability AI also doesn’t have a policy against images with public figures. That presumably makes deepfakes fair game (and Renaissance-style paintings of famous rappers), though the model struggles with faces at times, introducing odd artifacts that a skilled Photoshop artist rarely would.

“Our benchmark models that we release are based on general web crawls and are designed to represent the collective imagery of humanity compressed into files a few gigabytes big,” Mostaque said. “Aside from illegal content, there is minimal filtering, and it’s on the user to use it as they will.”

An image of Hitler generated by Stable Diffusion. Image Credits: Stability AI

Potentially more problematic are the soon-to-be-released tools for creating custom and fine-tuned Stable Diffusion models. An “AI furry porn generator” profiled by Vice offers a preview of what might come; an art student going by the name of CuteBlack trained an image generator to churn out illustrations of anthropomorphic animal genitalia by scraping artwork from furry fandom sites. The possibilities don’t stop at pornography. In theory, a malicious actor could fine-tune Stable Diffusion on images of riots and gore, for instance, or propaganda.

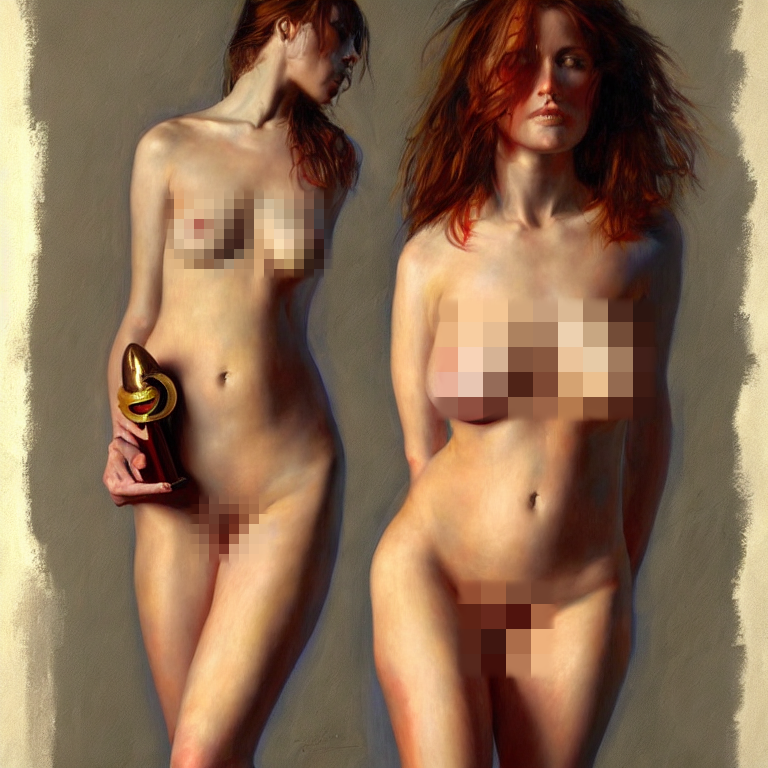

Already, testers in Stability AI’s Discord server are using Stable Diffusion to generate a range of content disallowed by other image generation services, including images of the war in Ukraine, nude women, an imagined Chinese invasion of Taiwan and controversial depictions of religious figures like the Prophet Muhammad. Likely, some of these images are against Stability AI’s own terms, but the company is currently relying on the community to flag violations. Many bear the telltale signs of an algorithmic creation, like disproportionate limbs and an incongruous mix of art styles. But others are passable at first glance. And the tech will continue to improve, presumably.

Nude women generated by Stable Diffusion. Image Credits: Stability AI

Mostaque acknowledged that the tools could be used by bad actors to create “really nasty stuff,” and CompVis says that the public release of the benchmark Stable Diffusion model will “incorporate ethical considerations.” But Mostaque argues that making the tools freely available allows the community to develop countermeasures.

“We hope to be the catalyst to coordinate global open source AI, both independent and academic, to build vital infrastructure, models and tools to maximize our collective potential,” Mostaque said. “This is amazing technology that can transform humanity for the better and should be open infrastructure for all.”

A generation from Stable Diffusion, with the prompt: “[Ukrainian president Volodymyr] Zelenskyy committed crimes in Bucha.” Image Credits: Stability AI

Not everyone agrees, as evidenced by the controversy over “GPT-4chan,” an AI model trained on one of 4chan’s infamously toxic discussion boards. AI researcher Yannic Kilcher made GPT-4chan, which learned to output racist, antisemitic and misogynist hate speech, available earlier this year on Hugging Face, a hub for sharing trained AI models. Following discussions on social media and Hugging Face’s comment section, the Hugging Face team first “gated” access to the model before removing it altogether, but not before it was downloaded more than a thousand times.

“War in Ukraine” images generated by Stable Diffusion. Image Credits: Stability AI

Meta’s recent chatbot fiasco illustrates the challenge of keeping even ostensibly safe models from going off the rails. Just days after making its most advanced AI chatbot to date, BlenderBot 3, available on the web, Meta was forced to confront media reports that the bot made frequent antisemitic comments and repeated false claims about former U.S. President Donald Trump winning reelection two years ago.

The publisher of AI Dungeon, Latitude, encountered a similar content problem. Some players of the text-based adventure game, which is powered by OpenAI’s text-generating GPT-3 system, observed that it would sometimes bring up extreme sexual themes, including pedophilia, the result of fine-tuning on fiction stories with gratuitous sex. Facing pressure from OpenAI, Latitude implemented a filter and started automatically banning gamers for purposefully prompting content that wasn’t allowed.

BlenderBot 3’s toxicity came from biases in the public websites that were used to train it. It’s a well-known problem in AI: even when fed filtered training data, models tend to amplify biases like photo sets that portray men as executives and women as assistants. With DALL-E 2, OpenAI has attempted to combat this by implementing techniques, including dataset filtering, that help the model generate more “diverse” images. But some users claim that these techniques have made the model less accurate than before at creating images based on certain prompts.

Stable Diffusion contains little in the way of mitigations besides training dataset filtering. So what’s to prevent someone from generating, say, photorealistic images of protests, “evidence” of fake moon landings and general misinformation? Nothing really. But Mostaque says that’s the point.

Given the prompt “protests against the dilma government, brazil [sic],” Stable Diffusion created this image. Image Credits: Stability AI

“A percentage of people are simply unpleasant and weird, but that’s humanity,” Mostaque said. “Indeed, it is our belief this technology will be prevalent, and the paternalistic and somewhat condescending attitude of many AI aficionados is misguided in not trusting society … We are taking significant safety measures including formulating cutting-edge tools to help mitigate potential harms across release and our own services. With hundreds of thousands developing on this model, we are confident the net benefit will be immensely positive and as billions use this tech harms will be negated.”